Persona Adaptable Strategies Make Large Language Models Tractable

Hattab, G., Anžel, A., Dubey, A., Ezekannagha, C., Yang, Z., & İlgen, B. (2025). Persona Adaptable Strategies Make Large Language Models Tractable. Proceedings of the 2024 8th International Conference on Natural Language Processing and Information Retrieval, 24–31. https://doi.org/10.1145/3711542.3711581.

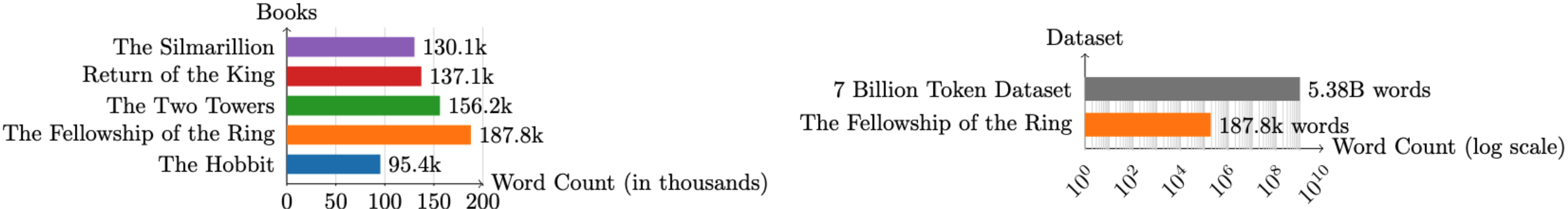

Approaches to explainable artificial intelligence for language models vary widely and include techniques such as visualization, model-generated text samples, and data analysis. These explanations often overlook the human perspective, leaving the public in the dark about these powerful artificial intelligence systems. Ten persona-adaptable strategies are proposed to help large language models explain their functionality to everyday users. The strategies are designed to be implemented by the model itself, allowing it to tailor explanations to user archetypes and adjust complexity and style accordingly. They incorporate both technical and human-centered considerations. Technical considerations include the training data, the model architecture used for training, the model's behavior, and its efficiency. Human-centered considerations make the understanding of large language models tractable. These include explaining the sheer volume of text and digital storage a language model requires, the time required to read and computationally process the text, the universality and multilingual properties of the text, the energy required for processing and abstract reasoning, and how the model matches natural language experience. The persona-adaptable strategies serve as a template for creating audience-centric explanations powered by large language models. The resulting explanations can make complex language models more transparent and understandable to the general public.